Recent advances in artificial intelligence have raised significant ethical and legal concerns, particularly in child protection. The capacity of AI tools to generate child sexual abuse material (CSAM) poses unprecedented challenges for law enforcement and for societal norms. In response, the UK government has announced sweeping new legislation aimed at combating the creation and distribution of AI-generated abuse images. The reforms are driven by evidence that such images are proliferating rapidly, prompting urgent action to safeguard children.

The proposed legislation would make it illegal to possess, create, or distribute AI tools designed to generate CSAM. The move is not merely reactive; it aims to address the root of the problem by targeting the means of production itself. Offenders caught in possession of these AI tools could face up to five years in prison. The law will also extend to individuals possessing AI “paedophile manuals,” texts that instruct readers on how to use AI technology to abuse children; such offenses could lead to a three-year prison sentence.

Jess Phillips, the UK’s safeguarding minister, emphasized the pioneering nature of this legislation, highlighting that the UK is positioning itself as a leader in the global fight against AI-facilitated child exploitation. Phillips articulated the necessity for international cooperation, stating that while these legislative measures are crucial, a truly comprehensive approach to eradicating child exploitation must include collaboration with other nations.

AI-generated abuse imagery has been used in various malicious ways, including “nudifying” real images of children or digitally placing their faces onto existing CSAM. Disturbingly, some victims, children who had innocently shared their images online through platforms like Instagram, have reported feeling targeted and terrified. The NSPCC’s Childline has documented distressing cases, such as that of a 15-year-old girl whose private images were manipulated, leaving her feeling profoundly unsafe and vulnerable. Such manipulation not only causes psychological harm but also opens the door to blackmail and further exploitation of young victims.

The government’s recognition of these dangers signifies a substantial shift towards a proactive stance on child safety in the digital realm. By acknowledging the role that AI tools play in facilitating grooming and abusive behavior, authorities are finally taking steps to confront this modern menace head-on.

A significant aspect of the proposed legislation is its focus on the networks that enable exploitation. Predators who operate websites dedicated to sharing CSAM and grooming techniques will face longer prison sentences of up to 10 years. This focus aims to hold both content creators and distributors accountable, addressing the systemic nature of child exploitation within certain digital communities.

Adopting a multi-faceted approach, the law also gives the UK Border Force new powers to mandate that suspected predators grant access to their digital devices. This measure is designed to act as a deterrent against individuals who may use encryption or other means to hide their illicit activities, thereby enhancing the ability of authorities to safeguard vulnerable children.

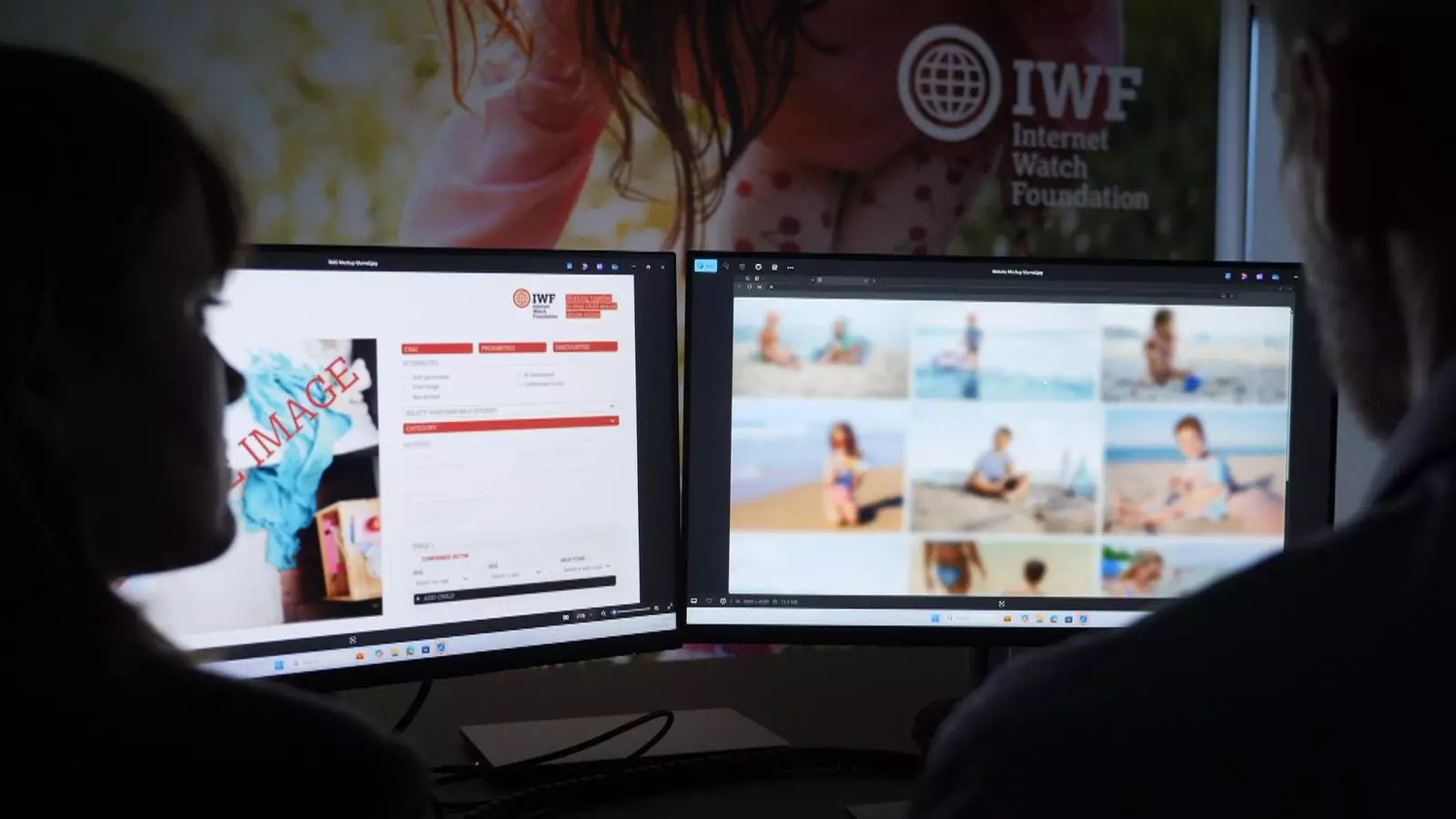

Reports from organizations such as the Internet Watch Foundation (IWF) point to an alarming increase in AI-generated CSAM. In a recent analysis, 3,512 AI-generated abuse images were identified on a single dark web site over just 30 days. The analysis also recorded a 10% increase in the most severe category of abuse images compared with the previous year, raising questions about the effectiveness of existing safeguards for children.

Derek Ray-Hill, the interim chief executive of the IWF, cautioned that some AI-generated images are strikingly realistic, making it increasingly difficult to distinguish real images from fabricated ones. This blurring of lines presents challenges not only for law enforcement but also for societal perceptions of child exploitation.

As the UK moves forward with this ambitious legislative framework, it underscores the urgent need for a collective, global response to the threat posed by AI-generated child exploitation. The government’s actions send a clear message: combating this heinous crime requires not just legislative change, but also societal awareness, education, and coordination among nations. Children’s safety remains a paramount concern, and it is imperative that all stakeholders, from government entities to tech companies and the public, work together to dismantle the networks that perpetuate abuse and ensure a safer digital future for the most vulnerable members of society.
